Luce acknowledged that the United States could not police the whole world or attempt to impose democratic institutions on all of mankind. Nonetheless, “the world of the 20th century,” he wrote, “if it is to come to life in any nobility of health and vigor, must be to a significant degree an American Century.” The essay called on all Americans “to accept wholeheartedly our duty and our opportunity as the most powerful and vital nation in the world and in consequence to exert upon the world the full impact of our influence, for such purposes as we see fit and by such measures as we see fit.”

Japan’s attack on Pearl Harbor propelled the United States wholeheartedly onto the international stage Luce believed it was destined to dominate, and the ringing title of his cri de coeur became a staple of patriotic Cold War and post-Cold War rhetoric. Central to this appeal was the affirmation of a virtuous calling. Luce’s essay singled out almost every professed ideal that would become a staple of wartime and Cold War propaganda: freedom, democracy, equality of opportunity, self-reliance and independence, cooperation, justice, charity—all coupled with a vision of economic abundance inspired by “our magnificent industrial products, our technical skills.” In present-day patriotic incantations, this is referred to as “American exceptionalism.”

The other, harder side of America’s manifest destiny was, of course, muscularity. Power. Possessing absolute and never-ending superiority in developing and deploying the world’s most advanced and destructive arsenal of war. Luce did not dwell on this dimension of “internationalism” in his famous essay, but once the world war had been entered and won, he became its fervent apostle—an outspoken advocate of “liberating” China from its new communist rulers, taking over from the beleaguered French colonial military in Vietnam, turning both the Korean and Vietnam conflicts from “limited wars” into opportunities for a wider virtuous war against and in China, and pursuing the rollback of the Iron Curtain with “tactical atomic weapons.” As Luce’s incisive biographer Alan Brinkley documents, at one point Luce even mulled the possibility of “plastering Russia with 500 (or 1,000) A bombs”—a terrifying scenario, but one that the keepers of the US nuclear arsenal actually mapped out in expansive and appalling detail in the 1950s and 1960s, before Luce’s death in 1967.

The “American Century” catchphrase is hyperbole, the slogan never more than a myth, a fantasy, a delusion. Military victory in any traditional sense was largely a chimera after World War II. The so-called Pax Americana itself was riddled with conflict and oppression and egregious betrayals of the professed catechism of American values. At the same time, postwar US hegemony obviously never extended to more than a portion of the globe. Much that took place in the world, including disorder and mayhem, was beyond America’s control.

Yet, not unreasonably, Luce’s catchphrase persists. The 21st-century world may be chaotic, with violence erupting from innumerable sources and causes, but the United States does remain the planet’s “sole superpower.” The myth of exceptionalism still holds most Americans in its thrall. US hegemony, however frayed at the edges, continues to be taken for granted in ruling circles, and not only in Washington. And Pentagon planners still emphatically define their mission as “full-spectrum dominance” globally.

Washington’s commitment to modernizing its nuclear arsenal rather than focusing on achieving the thoroughgoing abolition of nuclear weapons has proven unshakable. So has the country’s almost religious devotion to leading the way in developing and deploying ever more “smart” and sophisticated conventional weapons of mass destruction.

Welcome to Henry Luce’s—and America’s—violent century, even if thus far it’s lasted only 75 years. The question is just what to make of it these days.

Counting the Dead

We live in times of bewildering violence. In 2013, the chairman of the Joint Chiefs of Staff told a Senate committee that the world is “more dangerous than it has ever been.” Statisticians, however, tell a different story: that war and lethal conflict have declined steadily, significantly, even precipitously since World War II.

Much mainstream scholarship now endorses the declinists. In his influential 2011 book, The Better Angels of Our Nature: Why Violence Has Declined, Harvard psychologist Steven Pinker adopted the labels “the Long Peace” for the four-plus decades of the Cold War (1945-1991), and “the New Peace” for the post-Cold War years to the present. In that book, as well as in post-publication articles, postings, and interviews, he has taken the doomsayers to task. The statistics suggest, he declares, that “today we may be living in the most peaceable era in our species’s existence.”

Clearly, the number and deadliness of global conflicts have indeed declined since World War II. This so-called postwar peace was, and still is, however, saturated in blood and wracked with suffering.

It is reasonable to argue that total war-related fatalities during the Cold War decades were lower than in the six years of World War II (1939-1945) and certainly far less than the toll for the 20th century’s two world wars combined. It is also undeniable that overall death tolls have declined further since then. The five most devastating intrastate or interstate conflicts of the postwar decades—in China, Korea, Vietnam, Afghanistan, and between Iran and Iraq—took place during the Cold War. So did a majority of the most deadly politicides, or political mass killings, and genocides: in the Soviet Union, China (again), Yugoslavia, North Korea, North Vietnam, Sudan, Nigeria, Indonesia, Pakistan-Bangladesh, Ethiopia, Angola, Mozambique, and Cambodia, among other countries. The end of the Cold War certainly did not signal the end of such atrocities (as witness Rwanda, the Congo, and the implosion of Syria). As with major wars, however, the trajectory has been downward.

Unsurprisingly, the declinist argument celebrates the Cold War as less violent than the global conflicts that preceded it, and the decades that followed as statistically less violent than the Cold War. But what motivates the sanitizing of these years, now amounting to three-quarters of a century, with the label “peace”? The answer lies largely in a fixation on major powers. The great Cold War antagonists, the United States and the Soviet Union, bristling with their nuclear arsenals, never came to blows. Indeed, wars between major powers or developed states have become (in Pinker’s words) “all but obsolete.” There has been no World War III, nor is there likely to be.

Such upbeat quantification invites complacent forms of self-congratulation. (How comparatively virtuous we mortals have become!) In the United States, where we-won-the-Cold-War sentiment still runs strong, the relative decline in global violence after 1945 is commonly attributed to the wisdom, virtue, and firepower of US “peacekeeping.” In hawkish circles, nuclear deterrence—the Cold War’s MAD (mutually assured destruction) doctrine that was described early on as a “delicate balance of terror”—is still canonized as an enlightened policy that prevented catastrophic global conflict.

What Doesn’t Get Counted

Branding the long postwar era as an epoch of relative peace is disingenuous, and not just because it deflects attention from the significant death and agony that actually did occur and still does. It also obscures the degree to which the United States bears responsibility for contributing to, rather than impeding, militarization and mayhem after 1945. Ceaseless US-led transformations of the instruments of mass destruction—and the provocative global impact of this technological obsession—are by and large ignored.

Continuities in American-style “warfighting” (a popular Pentagon word) such as heavy reliance on airpower and other forms of brute force are downplayed. So is US support for repressive foreign regimes, as well as the destabilizing impact of many of the nation’s overt and covert overseas interventions. The more subtle and insidious dimension of postwar US militarization—namely, the violence done to civil society by funneling resources into a gargantuan, intrusive, and ever-expanding national security state—goes largely unaddressed in arguments fixated on numerical declines in violence since World War II.

Beyond this, trying to quantify war, conflict, and devastation poses daunting methodological challenges. Data advanced in support of the decline-of-violence argument is dense and often compelling, and derives from a range of respectable sources. Still, it must be kept in mind that the precise quantification of death and violence is almost always impossible. When a source offers fairly exact estimates of something like “war-related excess deaths,” you usually are dealing with investigators deficient in humility and imagination.

Take, for example, World War II, about which tens of thousands of studies have been written. Estimates of total “war-related” deaths from that global conflict range from roughly 50 million to more than 80 million. One explanation for such variation is the sheer chaos of armed violence. Another is what the counters choose to count and how they count it. Battle deaths of uniformed combatants are easiest to determine, especially on the winning side. Military bureaucrats can be relied upon to keep careful records of their own killed-in-action, but not, of course, of the enemy they kill. War-related civilian fatalities are even more difficult to assess, although, as in World War II, they commonly are far greater than deaths in combat.

Does the data source go beyond so-called battle-related collateral damage to include deaths caused by war-related famine and disease? Does it take into account deaths that may have occurred long after the conflict itself was over (as from radiation poisoning after Hiroshima and Nagasaki, or from the US use of Agent Orange in the Vietnam War)? The difficulty of assessing the toll of civil, tribal, ethnic, and religious conflicts with any exactitude is obvious.

Concentrating on fatalities and their averred downward trajectory also draws attention away from broader humanitarian catastrophes. In mid-2015, for instance, the Office of the United Nations High Commissioner for Refugees reported that the number of individuals “forcibly displaced worldwide as a result of persecution, conflict, generalized violence, or human rights violations” had surpassed 60 million and was the highest level recorded since World War II and its immediate aftermath. Roughly two-thirds of these men, women, and children were displaced inside their own countries. The remainder were refugees, and over half of these refugees were children.

Here, then, is a trend line intimately connected to global violence that is not heading downward. In 1996, the UN’s estimate was that there were 37.3 million forcibly displaced individuals on the planet. Twenty years later, as 2015 ended, this had risen to 65.3 million—a 75% increase over the last two post-Cold War decades that the declinist literature refers to as the “new peace.”
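The 75% figure can be checked with a few lines of arithmetic. The sketch below is only an illustration: the two displacement totals come from the UN estimates quoted above, while the function and variable names are hypothetical.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100


# UN estimates quoted in the text, in millions of forcibly displaced people.
displaced_1996 = 37.3
displaced_2015 = 65.3

increase = percent_increase(displaced_1996, displaced_2015)
print(f"Increase in forcibly displaced people: about {increase:.0f}%")
```

The result rounds to the 75% cited, which confirms that the comparison rests on the 1996 and end-of-2015 totals alone.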

Other disasters inflicted on civilians are less visible than uprooted populations. Harsh conflict-related economic sanctions, which often cripple hygiene and healthcare systems and may precipitate a sharp spike in infant mortality, usually do not find a place in itemizations of military violence. US-led UN sanctions imposed against Iraq for 13 years beginning in 1990 in conjunction with the first Gulf War are a stark example of this. An account published in the New York Times Magazine in July 2003 accepted the fact that “at least several hundred thousand children who could reasonably have been expected to live died before their fifth birthday.” And after all-out wars, who counts the maimed, or the orphans and widows, or those the Japanese in the wake of World War II referred to as the “elderly orphaned”—parents bereft of their children?

Figures and tables, moreover, can only hint at the psychological and social violence suffered by combatants and noncombatants alike. It has been suggested, for instance, that 1 in 6 people in areas afflicted by war may suffer from a mental disorder (as opposed to 1 in 10 in normal times). Even where American military personnel are concerned, trauma did not become a serious focus of concern until 1980, seven years after the US retreat from Vietnam, when post-traumatic stress disorder (PTSD) was officially recognized as a mental-health issue.

In 2008, a massive sampling study of 1.64 million US troops deployed to Afghanistan and Iraq between October 2001 and October 2007 estimated “that approximately 300,000 individuals currently suffer from PTSD or major depression and that 320,000 individuals experienced a probable TBI [traumatic brain injury] during deployment.” As these wars dragged on, the numbers naturally increased. To extend the ramifications of such data to wider circles of family and community—or, indeed, to populations traumatized by violence worldwide—defies statistical enumeration.

Terror Counts and Terror Fears

Largely unmeasurable, too, is violence in a different register: the damage that war, conflict, militarization, and plain existential fear inflict upon civil society and democratic practice. This is true everywhere but has been especially conspicuous in the United States since Washington launched its “global war on terror” in response to the attacks of September 11, 2001.

Here, numbers are perversely provocative, for the lives claimed in 21st-century terrorist incidents can be interpreted as confirming the decline-in-violence argument. From 2000 through 2014, according to the widely cited Global Terrorism Index, “more than 61,000 incidents of terrorism claiming over 140,000 lives have been recorded.” Including September 11th, countries in the West experienced less than 5% of these incidents and 3% of the deaths. The Chicago Project on Security and Terrorism, another minutely documented tabulation based on combing global media reports in many languages, puts the number of suicide bombings from 2000 through 2015 at 4,787 attacks in more than 40 countries, resulting in 47,274 deaths.

These atrocities are incontestably horrendous and alarming. Grim as they are, however, the numbers themselves are comparatively low when set against earlier conflicts. For specialists in World War II, the “140,000 lives” estimate carries an almost eerie resonance, since this is the rough figure usually accepted for the death toll from a single act of terror bombing, the atomic bomb dropped on Hiroshima. The tally is also low compared to contemporary deaths from other causes. Globally, for example, more than 400,000 people are murdered annually. In the United States, the danger of being killed by falling objects or lightning is at least as great as the threat from Islamist militants.

This leaves us with a perplexing question: If the overall incidence of violence, including 21st-century terrorism, is relatively low compared to earlier global threats and conflicts, why has the United States responded by becoming an increasingly militarized, secretive, unaccountable, and intrusive “national security state”? Is it really possible that a patchwork of non-state adversaries that do not possess massive firepower or follow traditional rules of engagement has, as the chairman of the Joint Chiefs of Staff declared in 2013, made the world more threatening than ever?

For those who do not believe this to be the case, possible explanations for the accelerating militarization of the United States come from many directions. Paranoia may be part of the American DNA—or, indeed, hardwired into the human species. Or perhaps the anticommunist hysteria of the Cold War simply metastasized into a post-9/11 pathological fear of terrorism. Machiavellian fear-mongering certainly enters the picture, led by conservative and neoconservative civilian and military officials of the national security state, along with opportunistic politicians and war profiteers of the usual sort. Cultural critics predictably point an accusing finger as well at the mass media’s addiction to sensationalism and catastrophe, now intensified by the proliferation of digital social media.

To all this must be added the peculiar psychological burden of being a “superpower” and, from the 1990s on, the planet’s “sole superpower”—a situation in which “credibility” is measured mainly in terms of massive cutting-edge military might. It might be argued that this mindset helped “contain Communism” during the Cold War and provides a sense of security to US allies. What it has not done is ensure victory in actual war, although not for want of trying. With some exceptions (Grenada, Panama, the brief 1991 Gulf War, and the Balkans), the US military has not tasted victory since World War II—Korea, Vietnam, and recent and current conflicts in the Greater Middle East being boldface examples of this failure. This, however, has had no impact on the hubris attached to superpower status. Brute force remains the ultimate measure of credibility.

The traditional American way of war has tended to emphasize the “three Ds” (defeat, destroy, devastate). Since 1996, the Pentagon’s proclaimed mission has been to maintain “full-spectrum dominance” in every domain (land, sea, air, space, and information) and, in practice, in every accessible part of the world. The Air Force Global Strike Command, activated in 2009 and responsible for managing two-thirds of the US nuclear arsenal, typically publicizes its readiness for “Global Strike… Any Target, Any Time.”

In 2015, the Department of Defense acknowledged maintaining 4,855 physical “sites”—meaning bases ranging in size from huge contained communities to tiny installations—of which 587 were located overseas in 42 foreign countries. An unofficial investigation that includes small and sometimes impermanent facilities puts the number at around 800 in 80 countries. Over the course of 2015, to cite yet another example of the overwhelming nature of America’s global presence, elite US special operations forces were deployed to around 150 countries, and Washington provided assistance in arming and training security forces in an even larger number of nations.

America’s overseas bases reflect, in part, an enduring inheritance from World War II and the Korean War. The majority of these sites are located in Germany (181), Japan (122), and South Korea (83) and were retained after their original mission of containing communism disappeared with the end of the Cold War. Deployment of elite special operations forces is also a Cold War legacy (exemplified most famously by the Army’s “Green Berets” in Vietnam) that expanded after the demise of the Soviet Union. Dispatching covert missions to three-quarters of the world’s nations, however, is largely a product of the war on terror.

Many of these present-day undertakings require maintaining overseas “lily pad” facilities that are small, temporary, and unpublicized. And many, moreover, are integrated with covert CIA “black operations.” Combating terror involves practicing terror—including, since 2002, an expanding campaign of targeted assassinations by unmanned drones. For the moment, this latest mode of killing remains dominated by the CIA and the US military (with the United Kingdom and Israel following some distance behind).

Counting Nukes

The “delicate balance of terror” that characterized nuclear strategy during the Cold War has not disappeared. Rather, it has been reconfigured. The US and Soviet arsenals that reached a peak of insanity in the 1980s have been reduced by about two-thirds—a praiseworthy accomplishment but one that still leaves the world with around 15,400 nuclear weapons as of January 2016, 93% of them in US and Russian hands. Close to 2,000 of the latter on each side are still actively deployed on missiles or at bases with operational forces.

This downsizing, in other words, has not removed the wherewithal to destroy the Earth as we know it many times over. Such destruction could come about indirectly as well as directly, with even a relatively “modest” nuclear exchange between, say, India and Pakistan triggering a cataclysmic climate shift—a “nuclear winter”—that could result in massive global starvation and death. Nor does the fact that seven additional nations now possess nuclear weapons (and more than 40 others are deemed “nuclear weapons capable”) mean that “deterrence” has been enhanced. The future use of nuclear weapons, whether by deliberate decision or by accident, remains an ominous possibility. That threat is intensified by the possibility that nonstate terrorists may somehow obtain and use nuclear devices.

What is striking at this moment in history is that paranoia couched as strategic realism continues to guide US nuclear policy and, following America’s lead, that of the other nuclear powers. As announced by the Obama administration in 2014, the potential for nuclear violence is to be “modernized.” In concrete terms, this translates as a 30-year project that will cost the United States an estimated $1 trillion (not including the usual future cost overruns for producing such weapons), perfect a new arsenal of “smart” and smaller nuclear weapons, and extensively refurbish the existing delivery “triad” of long-range manned bombers, nuclear-armed submarines, and land-based intercontinental ballistic missiles carrying nuclear warheads.

Nuclear modernization, of course, is but a small portion of the full spectrum of American might—a military machine so massive that it inspired President Barack Obama to speak with unusual emphasis in his State of the Union address in January 2016. “The United States of America is the most powerful nation on Earth,” he declared. “Period. Period. It’s not even close. It’s not even close. It’s not even close. We spend more on our military than the next eight nations combined.”

Official budgetary expenditures and projections provide a snapshot of this enormous military machine, but here again numbers can be misleading. Thus, the “base budget” for defense announced in early 2016 for fiscal year 2017 amounts to roughly $600 billion, but this falls far short of what the actual outlay will be. When all other discretionary military- and defense-related costs are taken into account—nuclear maintenance and modernization, the “war budget” that pays for so-called overseas contingency operations like military engagements in the Greater Middle East, “black budgets” that fund intelligence operations by agencies including the CIA and the National Security Agency, appropriations for secret high-tech military activities, “veterans affairs” costs (including disability payments), military aid to other countries, huge interest costs on the military-related part of the national debt, and so on—the actual total annual expenditure is close to $1 trillion.

Such stratospheric numbers defy easy comprehension, but one does not need training in statistics to bring them closer to home. Simple arithmetic suffices. The projected bill for just the 30-year nuclear modernization agenda comes to over $90 million a day, or almost $4 million an hour. The $1 trillion price tag for maintaining the nation’s status as “the most powerful nation on Earth” for a single year amounts to roughly $2.74 billion a day, over $114 million an hour.
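For readers who want to see the simple arithmetic spelled out, here is a minimal sketch. The dollar totals are the estimates cited in the text. The 365-day year, the 24-hour day, and all function and variable names are assumptions made for illustration.

```python
# Estimates cited in the text, in US dollars.
NUCLEAR_MODERNIZATION_TOTAL = 1_000_000_000_000  # about $1 trillion over 30 years
MODERNIZATION_YEARS = 30
ANNUAL_MILITARY_TOTAL = 1_000_000_000_000  # close to $1 trillion for a single year

# Simplifying assumptions: no leap years, round-the-clock spending.
DAYS_PER_YEAR = 365
HOURS_PER_DAY = 24


def per_day_and_hour(total_dollars: float, years: float) -> tuple[float, float]:
    """Spread a total evenly over the given number of years."""
    per_day = total_dollars / (years * DAYS_PER_YEAR)
    per_hour = per_day / HOURS_PER_DAY
    return per_day, per_hour


nuke_day, nuke_hour = per_day_and_hour(NUCLEAR_MODERNIZATION_TOTAL, MODERNIZATION_YEARS)
annual_day, annual_hour = per_day_and_hour(ANNUAL_MILITARY_TOTAL, 1)

print(f"Nuclear modernization: ${nuke_day / 1e6:.1f} million a day, "
      f"${nuke_hour / 1e6:.1f} million an hour")
print(f"Total annual outlay: ${annual_day / 1e9:.2f} billion a day, "
      f"${annual_hour / 1e6:.1f} million an hour")
```

Spreading the totals evenly is itself a simplification, since actual spending is not uniform across days or years, but it is enough to reproduce the orders of magnitude given above.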

Creating a capacity for violence greater than the world has ever seen is costly—and remunerative.

So an era of a “new peace”? Think again. We’re only three-quarters of the way through America’s violent century and there’s more to come.

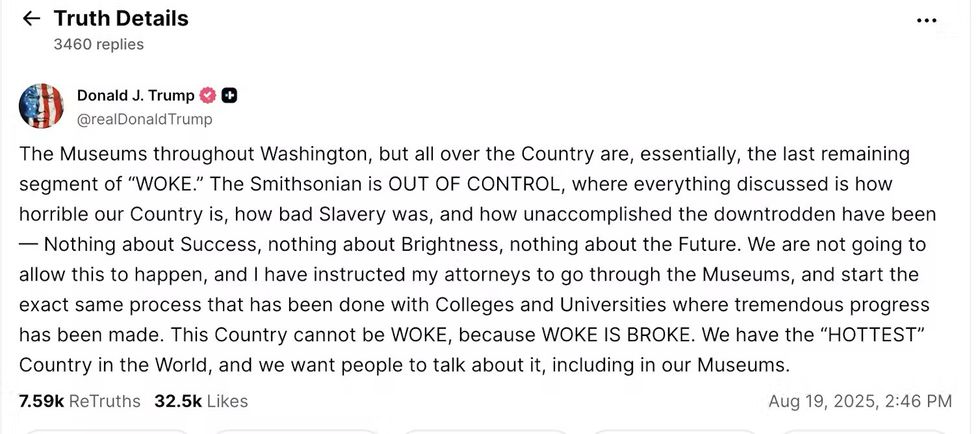

A screenshot is shown of President Donald Trump’s August 19, 2025 Truth Social post about the Smithsonian.

A screenshot is shown of President Donald Trump’s August 19, 2025 Truth Social post about the Smithsonian.  As part of efforts to purge references to gay people, US Defense Secretary Pete Hegseth has ordered the removal of gay rights advocate Harvey Milk’s name from a Navy ship. (Photo: Screenshot/Military.com)

As part of efforts to purge references to gay people, US Defense Secretary Pete Hegseth has ordered the removal of gay rights advocate Harvey Milk’s name from a Navy ship. (Photo: Screenshot/Military.com)