As CNBC reported last month, more than 1,000 people signed a petition calling on Port Washington officials to obtain voter approval before entering into the deal, but the Common Council and a review board went ahead with creating a Tax Incremental District for the project without public input. The data center still requires other approvals to officially move forward.

"We will not continue to be silenced and ignored while our beautiful and pristine city is taken away from us and handed over to a corporation intent on extracting as many resources as they can regardless of the impact on the people who live here," said Le Jeune. "Most leaders would have tabled the issue after receiving public input and providing sufficient notice. But you did nothing, and you laughed about it."

Le Jeune spoke for her allotted three minutes and went slightly over the time limit. She then chanted, "Recall, recall, recall!" at members of the Common Council as other community members applauded.

Police Chief Kevin Hingiss then approached Le Jeune while she was sitting in her seat, listening to the next speaker, and asked her to leave.

She refused, and another officer approached her before a chaotic scene broke out.

City officials had told attendees not to speak out of order during the meeting, and Le Jeune acknowledged that she and others had spoken out of turn at times.

But she told the Milwaukee Journal Sentinel that she had been surprised by the police officers' demand that she leave, and by the eventual violence of the incident, with officers physically removing her from her seat and dragging her and two other people across the floor.

The two other residents had approached Le Jeune to protest the officers' actions.

"I never expected something like that to happen in a meeting. It was very strange," she told the Journal Sentinel. "Suddenly this police chief showed up in front of me, and all I was thinking was: 'Wait, what is going on? Why is he interrupting her speech?' ... It felt like [police] were kind of primed tonight to pounce."

State Sen. Chris Larson (D-7) said that "police should not be allowed to violently detain a person who is nonviolently exercising their free speech. This used to be something all Americans agreed on."

William Walter, executive director of Our Wisconsin Revolution, filmed the arrest and told ABC News affiliate WISN, "I've never seen a response like that in my life."

"What I did see was a lot of members of the Port Washington community who are really frustrated that they're being ignored and they're being dismissed by their elected officials," he said.

AI data centers, he added, "will impact you. They'll impact your friends, your family, your neighbors, your parents, your children. These are the kinds of things that are going to be dictating the future of Wisconsin, not just for the next couple of years but for the next decade, the next 50 years."

After Le Jeune's arrest, another resident, Dawn Stacey, denounced the Common Council members for allowing the aggressive arrest.

"We have so many people who have these concerns about this data center," said Stacey. "Are we being heard by the Common Council? No we're not. Instead of being heard we have people being dragged out of the room."

"For democracy to thrive, we need to have respect between public servants and the people who they serve," she added.

Vantage has distributed flyers in Port Washington, which has a population of 17,000, promising residents 330 full-time jobs after construction. But as CNBC reported, "Data centers don't tend to create a lot of long-lasting jobs."

Another project in Mount Pleasant, Wisconsin, hired 3,000 construction workers and foresees 500 employees, while McKinsey said a data center it is planning would need 1,500 people for construction but only around 50 for "steady-state operations."

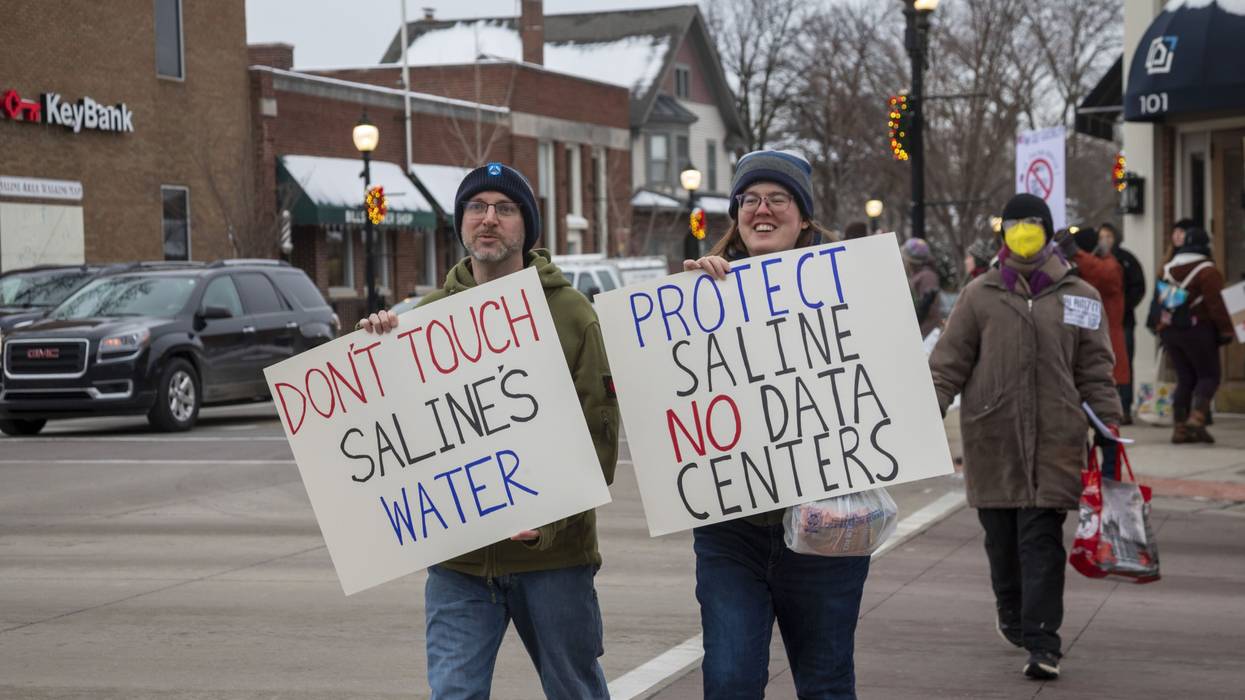

Residents in Port Washington have also raised concerns about the data center's environmental impact, including its water use, as well as the potential for soaring utility prices for residents and the overall purpose of advancing AI.

As Common Dreams reported Thursday, the development of data centers has caused a rapid surge in consumers' electricity bills, with costs rising more than 250% in just five years. Vantage has claimed its center will run on 70% renewable energy, but more than half of the electricity used to power data center campuses so far has come from fossil fuels, raising concerns that the expansion of the facilities will worsen the climate emergency.

A recent Morning Consult poll found that a rapidly growing number of Americans support a ban on AI data centers in their surrounding areas: 41% said they would support a ban in the survey taken in late November, compared with 37% in October.