Previously Hinton had said he saw a 10% chance of that happening.

"We've never had to deal with things more intelligent than ourselves before," Hinton explained. "And how many examples do you know of a more intelligent thing being controlled by a less intelligent thing? There are very few examples. There's a mother and baby. Evolution put a lot of work into allowing the baby to control the mother, but that's about the only example I know of."

Hinton, who was awarded the Nobel Prize in physics this year for his research into machine learning and AI, left his job at Google last year, saying he wanted to be able to speak out more about the dangers of unregulated AI.

He has warned that AI chatbots could be used by authoritarian leaders to manipulate the public, and said last year that "the kind of intelligence we're developing is very different from the intelligence we have."

On Friday, Hinton said he is particularly worried that "the invisible hand" of the market will not keep humans safe from a technology that surpasses their intelligence, and he called for strict regulation of AI.

"Just leaving it to the profit motive of large companies is not going to be sufficient to make sure they develop it safely," said Hinton.

More than 120 bills have been proposed in the U.S. Congress to regulate AI robocalls, the technology's role in national security, and other issues, while the Biden administration has taken some action to rein in AI development.

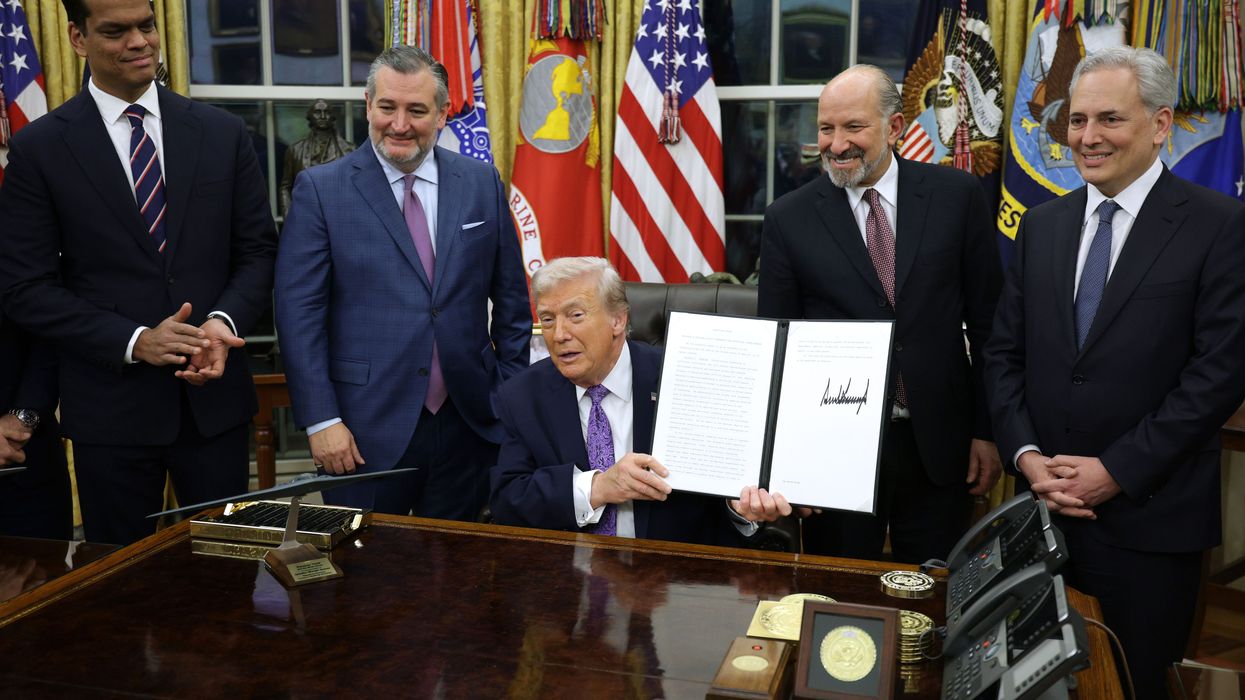

An executive order calling for "Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence" said that "harnessing AI for good and realizing its myriad benefits requires mitigating its substantial risks." President-elect Donald Trump is expected to rescind the order.

The White House Blueprint for an AI Bill of Rights calls for safe and effective systems, algorithmic discrimination protections, data privacy, notice and explanation when AI is used, and the ability to opt out of automated systems.

But rights advocates deemed the European Union's Artificial Intelligence Act a "failure" this year, after industry lobbying helped ensure the law included numerous loopholes and exemptions for law enforcement and migration authorities.

"The only thing that can force those big companies to do more research on safety," said Hinton on Friday, "is government regulation."