Demonstrators opposed to a plan by Elon Musk's xAI to use gas turbines for a new data center rally ahead of a public comment meeting on the project at Fairley High School in Memphis, Tennessee on April 25, 2025.

Green Energy Is Still a Better Bet Than AI

The buildout of lots and lots of power-gobbling data centers is not as inevitable as it appears.

Caveat—this post was written entirely with my own intelligence, so who knows. Maybe it’s wrong.

But the despairing question I get asked most often is: “What’s the use? However much clean energy we produce, AI data centers will simply soak it all up.” It’s too early in the course of this technology to know anything for sure, but there are a few important answers to that.

The first comes from Amory Lovins, the long-time energy guru who wrote a paper some months ago pointing out that energy demand from AI was highly speculative, an idea he based on… history:

In 1999, the US coal industry claimed that information technology would need half the nation’s electricity by 2020, so a strong economy required far more coal-fired power stations. Such claims were spectacularly wrong but widely believed, even by top officials. Hundreds of unneeded power plants were built, hurting investors. Despite that costly lesson, similar dynamics are now unfolding again.

As Debra Kahn pointed out in Politico a few weeks ago:

So far, data centers have only increased total US power demand by a tiny amount (they make up roughly 4.4 percent of electricity use, which rose 2 percent overall last year).

And it’s possible that even if AI expands as its proponents expect, it will grow steadily more efficient, meaning it would need much less energy than predicted. Lovins again:

For example, NVIDIA’s head of data center product marketing said in September 2024 that in the past decade, “we’ve seen the efficiency of doing inferences in certain language models has increased effectively by 100,000 times. Do we expect that to continue? I think so: There’s lots of room for optimization.” Another NVIDIA comment reckons to have made AI inference (across more models) 45,000× more efficient since 2016, and expects orders-of-magnitude further gains. Indeed, in 2020, NVIDIA’s Ampere chips needed 150 joules of energy per inference; in 2022, their Hopper successors needed just 15; and in 2024, their Blackwell successors needed 24 but also quintupled performance, thus using 31× less energy than Ampere per unit of performance. (Such comparisons depend on complex and wide-ranging assumptions, creating big discrepancies, so another expert interprets the data as up to 25× less energy and 30× better performance, multiplying to 750×.)
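For anyone who wants to check the arithmetic in that passage, here is a minimal sketch. It assumes that "quintupled performance" means exactly 5× and that the joules-per-inference figures are directly comparable across chip generations; the function and variable names are mine, not Lovins's.

```python
def energy_gain_per_unit_performance(old_joules, new_joules, performance_multiple):
    """Return how many times less energy the newer chip uses per unit of performance.

    Assumption: the gain is the ratio of energy per inference, scaled by
    how much more work the newer chip does for that energy.
    """
    return (old_joules / new_joules) * performance_multiple


# Figures quoted in the passage: Ampere 150 J, Blackwell 24 J with 5x performance.
ampere_to_blackwell = energy_gain_per_unit_performance(150, 24, 5)

# The alternative expert reading: 25x less energy times 30x better performance.
alternative_reading = 25 * 30

print(ampere_to_blackwell)   # 31.25, which the passage rounds to 31x
print(alternative_reading)   # 750
```

The gap between 31× and 750× is the point: the answer depends heavily on which assumptions go in.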

But that doesn’t mean that the AI industry, and its utility and government partners, won’t try to build ever more generating capacity to supply whatever power needs they project may be coming. In some places they already are: Internet Alley in Virginia has more than 150 large centers, using a quarter of its power. This is becoming an intense political issue in the Old Dominion State. As Dave Weigel reported yesterday, the issue has begun to roil Virginia politics—the GOP candidate for governor sticks with her predecessor, Glenn Youngkin, in somehow blaming solar energy for rising electricity prices (“the sun goes down”), while the Democratic nominee, Abigail Spanberger, is trying to figure out a response:

Neither nominee has gone as far in curbing growth as many suburban DC legislators and activists want. They see some of the world’s wealthiest companies getting plugged into the grid without locals reaping the benefits. Some Virginia elections have turned into battles over which candidate will be toughest on data centers; other elections have already been lost over them.

“My advice to Abigail has been: Look at where the citizens of Virginia are on the data centers,” said state Sen. Danica Roem, a Democrat who represents part of Prince William County in DC’s growing suburbs. “There are a lot of people willing to be single-issue, split-ticket voters based on this.”

Indeed, it’s shaping up to be the mother of all political issues as the midterms loom: pretty much everyone pays electric rates, and under President Donald Trump they’re starting to skyrocket. The reason isn’t hard to figure out: He’s simultaneously accelerating demand with his support for data center buildout, and constricting supply by shutting down cheap solar and wind. In fact, one way of looking at AI is that its main use is as a vehicle to give the fossil fuel industry one last reason to expand.

If this sounds conspiratorial, consider this story from yesterday: John McCarrick, newly hired by industry colossus OpenAI to find energy sources for ChatGPT, is:

an official from the first Trump administration who is a dedicated champion of natural gas.

John McCarrick, the company’s new head of Global Energy Policy, was a senior energy policy advisor in the first Trump administration’s Bureau of Energy Resources in the Department of State while under former Secretaries of State Rex Tillerson and Mike Pompeo.

As deputy assistant secretary for Energy Transformation and the special envoy for International Energy Affairs, McCarrick promoted exports of American liquefied natural gas to Europe in the wake of the Russian invasion of Ukraine, and advocated for Asian countries to invest in natural gas.

The choice to hire McCarrick matches the intentions of OpenAI’s Trump-dominating CEO Sam Altman, who said in a U.S. Senate hearing in May that “in the short term, I think [the future of powering AI] probably looks like more natural gas.”

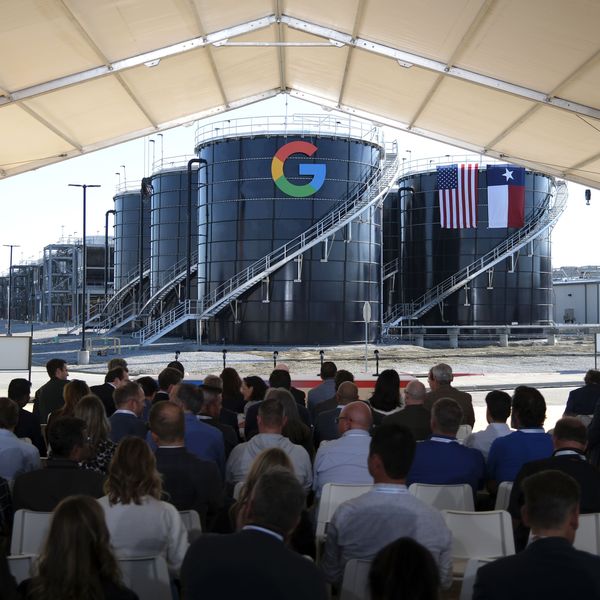

Sam Altman himself is an acolyte of Peter Thiel, the famous climate denier who recently suggested Greta Thunberg might be the anti-Christ. But it’s all of them. In the rush to keep their valuations high, the big AI players are increasingly relying not just on fracked gas but on the very worst version of it. As Bloomberg reported early in the summer:

The trend has sparked an unlikely comeback for a type of gas turbine that long ago fell out of favor for being inefficient and polluting… a technology that’s largely been relegated to the sidelines of power production: small, single cycle natural gas turbines.

In fact, the big suppliers are now companies like Caterpillar, not known for cutting-edge turbine technology; these are small and comparatively dirty units.

(The ultimate example of this is Elon Musk’s Colossus supercomputer in Memphis, a superpolluter, which I wrote about for the New Yorker.) Oh, and it’s not just air pollution. A new threat emerged in the last few weeks, according to Tom Perkins in the Guardian:

Advocates are particularly concerned over the facilities’ use of Pfas gas, or f-gas, which can be potent greenhouse gases, and may mean datacenters’ climate impact is worse than previously thought. Other f-gases turn into a type of dangerous compound that is rapidly accumulating across the globe.

No testing for Pfas air or water pollution has yet been done, and companies are not required to report the volume of chemicals they use or discharge. But some environmental groups are starting to push for state legislation that would require more reporting.

Look, here’s one bottom line: If we actually had to build enormous networks of AI data centers, the obvious, cheap, and clean way to do it would be with lots of solar energy. It goes up fast. As an industry study found as long ago as December of 2024 (an eon in AI time):

Off-grid solar microgrids offer a fast path to power AI datacenters at enormous scale. The tech is mature, the suitable parcels of land in the US Southwest are known, and this solution is likely faster than most, if not all, alternatives.

As one of the country’s leading energy executives said in April:

“Renewables and battery storage are the lowest-cost form of power generation and capacity,” according to NextEra chief executive John Ketchum. “We can build these projects and get new electrons on the grid in 12 to 18 months.”

But we can’t do that because the Trump administration has a corrupt ideological bias against clean energy, the latest example of which came last week when a giant Nevada solar project was cancelled. As Jael Holzman was the first to report:

Esmeralda 7 was supposed to produce a gargantuan 6.2 gigawatts of power–equal to nearly all the power supplied to southern Nevada by the state’s primary public utility. It would do so with a sprawling web of solar panels and batteries across the western Nevada desert. Backed by NextEra Energy, Invenergy, ConnectGen, and other renewables developers, the project was moving forward at a relatively smooth pace under the Biden administration.

But now it’s dead. One result will be higher prices for consumers. Despite everything the administration does, renewables are so cheap and easy that markets just keep choosing them. To beat that means policy as perverse as what we’re seeing—jury-rigging tired gas turbines and refitting ancient coal plants. All to power a technology that… seems increasingly like a bubble?

Here we need to get away from energy implications a bit, and just think about the underlying case for AI, and specifically the large language models that are the thing we’re spending so much money and power on. The AI industry is, increasingly, the American economy: it accounts for almost half of US economic growth this year, and an incredible 80% of the expansion of the stock market. As Ruchir Sharma wrote in the FT last week, the US economy is “one big bet” on AI:

The main reason AI is regarded as a magic fix for so many different threats is that it is expected to deliver a significant boost to productivity growth, especially in the US. Higher output per worker would lower the burden of debt by boosting GDP. It would reduce demand for labour, immigrant or domestic. And it would ease inflation risks, including the threat from tariffs, by enabling companies to raise wages without raising prices.

But for this happy picture to come to pass, AI has to actually work, which is to say do more than help kids cheat on their homework. And there’s been a growing sense in recent months that all is not right on that front. I’ve been following two AI skeptics for a year or so, both on Substack (where increasingly, in-depth and non-orthodox reporting goes to thrive).

The first is Gary Marcus, an AI researcher who has concluded that large language models like ChatGPT are going down a blind alley. If you like to watch video, here is an encapsulation of his main points, published over the weekend. If you prefer that old-fashioned technology of reading (call me a Luddite, but it seems faster and more efficient, and much easier to excerpt), here’s his recent account from the Times explaining why businesses are having trouble finding reasons to pay money for this technology:

Large language models have had their uses, especially for coding, writing, and brainstorming, in which humans are still directly involved. But no matter how large we have made them, they have never been worthy of our trust.

Indeed, an MIT study this year found that 95% of businesses reported no measurable increase in productivity from using AI; the Harvard Business Review, a couple of weeks ago, said AI “‘workslop’ was cratering productivity.”

And what that means, in turn, is that there’s no real way to imagine recovering the hundreds of billions and trillions that are currently being invested in the technology. The keeper of the spreadsheets is the other Substacker, Ed Zitron, who writes extremely long and increasingly exasperated essays looking at the financial lunacy of these “investments” which, remember, underpin the stock market at the moment. Here’s last week’s:

In fact, let me put it a little simpler: All of those data center deals you’ve seen announced are basically bullshit. Even if they get the permits and the money, there are massive physical challenges that cannot be resolved by simply throwing money at them.

Today I’m going to tell you a story of chaos, hubris and fantastical thinking. I want you to come away from this with a full picture of how ridiculous the promises are, and that’s before you get to the cold hard reality that AI fucking sucks.

I’m not pretending this is the final word on this subject. No one knows how it’s all going to work out, but my guess is: badly. Already it’s sending electricity prices soaring and increasing fossil fuel emissions.

But maybe it’s also running into other kinds of walls that will eventually reduce demand. Maybe human beings will decide to be… human. The new Sora “service” launched by OpenAI that allows your AI to generate fake videos, for instance, threatens to undermine the entire business of looking at videos because… what’s the point? If you can’t tell if the guy eating a ridiculously hot chili pepper is real or not, why would you watch? In a broader sense, as John Burn-Murdoch wrote in the FT (and again how lucky Europe is to have a reputable business newspaper), we may be reaching “peak social media”:

It has gone largely unnoticed that time spent on social media peaked in 2022 and has since gone into steady decline, according to an analysis of the online habits of 250,000 adults in more than 50 countries carried out for the FT by the digital audience insights company GWI.

And this is not just the unwinding of a bump in screen time during pandemic lockdowns—usage has traced a smooth curve up and down over the past decade-plus. Across the developed world, adults aged 16 and older spent an average of two hours and 20 minutes per day on social platforms at the end of 2024, down by almost 10 per cent since 2022. Notably, the decline is most pronounced among the erstwhile heaviest users—teens and 20-somethings.

Which is to say: Perhaps at some point we’ll begin to come to our senses and start using our brains and bodies for the things they were built for: contact with each other, and with the world around us. That’s a lot to ask, but the world can turn in good directions as well as bad. As a final word, there’s this from last week from Pope Leo, speaking to a bunch of news executives from around the world, and imploring them to cool it with the junk they’re putting out:

Communication must be freed from the misguided thinking that corrupts it, from unfair competition, and the degrading practice of so-called clickbait.

Stay tuned. This story will have a lot to do with how the world turns out.

Caveat—this post was written entirely with my own intelligence, so who knows. Maybe it’s wrong.

But the despairing question I get asked most often is: “What’s the use? However much clean energy we produce, AI data centers will simply soak it all up.” It’s too early in the course of this technology to know anything for sure, but there are a few important answers to that.

The first comes from Amory Lovins, the long-time energy guru who wrote a paper some months ago pointing out that energy demand from AI was highly speculative, an idea he based on… history:

In 1999, the US coal industry claimed that information technology would need half the nation’s electricity by 2020, so a strong economy required far more coal-fired power stations. Such claims were spectacularly wrong but widely believed, even by top officials. Hundreds of unneeded power plants were built, hurting investors. Despite that costly lesson, similar dynamics are now unfolding again.

As Debra Kahn pointed out in Politico a few weeks ago:

So far, data centers have only increased total US power demand by a tiny amount (they make up roughly 4.4 percent of electricity use, which rose 2 percent overall last year).

And it’s possible that even if AI expands as its proponents expect, it will grow steadily more efficient, meaning it would need much less energy than predicted. Lovins again:

For example, NVIDIA’s head of data center product marketing said in September 2024 that in the past decade, “we’ve seen the efficiency of doing inferences in certain language models has increased effectively by 100,000 times. Do we expect that to continue? I think so: There’s lots of room for optimization.” Another NVIDIA comment reckons to have made AI inference (across more models) 45,000× more efficient since 2016, and expects orders-of magnitude further gains. Indeed, in 2020, NVIDIA’s Ampere chips needed 150 joules of energy per inference; in 2022, their Hopper successors needed just 15; and in 2024, their Blackwell successors needed 24 but also quintupled performance, thus using 31× less energy than Ampere per unit of performance. (Such comparisons depend on complex and wideranging assumptions, creating big discrepancies, so another expert interprets the data as up to 25× less energy and 30× better performance, multiplying to 750×.)

But that doesn’t mean that the AI industry, and its utility and government partners, won’t try to build ever more generating capacity to supply whatever power needs they project may be coming. In some places they already are: Internet Alley in Virginia has more than 150 large centers, using a quarter of its power. This is becoming an intense political issue in the Old Dominion State. As Dave Weigel reported yesterday, the issue has begun to roil Virginia politics—the GOP candidate for governor sticks with her predecessor, Glenn Youngkin, in somehow blaming solar energy for rising electricity prices (“the sun goes down”), while the Democratic nominee, Abigail Spanberger, is trying to figure out a response:

Neither nominee has gone as far in curbing growth as many suburban DC legislators and activists want. They see some of the world’s wealthiest companies getting plugged into the grid without locals reaping the benefits. Some Virginia elections have turned into battles over which candidate will be toughest on data centers; other elections have already been lost over them.

“My advice to Abigail has been: Look at where the citizens of Virginia are on the data centers,” said state Sen. Danica Roem, a Democrat who represents part of Prince William County in DC’s growing suburbs. “There are a lot of people willing to be single-issue, split-ticket voters based on this.”

Indeed, it’s shaping up to be the mother of all political issues as the midterms loom—pretty much everyone pays electric rates, and under President Donald Trump they’re starting to skyrocket. The reason isn’t hard to figure out: He’s simultaneously accelerating demand with his support for data center buildout, and constricting supply by shutting down cheap solar and wind. In fact, one way of looking at AI is that its main use is as a vehicle to give the fossil fuel industry one last reason to expand.

If this sounds conspiratorial, consider this story from yesterday: John McCarrick, newly hired by industry colossus OpenAI to find energy sources for ChatGPT, is:

an official from the first Trump administration who is a dedicated champion of natural gas.

John McCarrick, the company’s new head of Global Energy Policy, was a senior energy policy advisor in the first Trump administration’s Bureau of Energy Resources in the Department of State while under former Secretaries of State Rex Tillerson and Mike Pompeo.

As deputy assistant secretary for Energy Transformation and the special envoy for International Energy Affairs, McCarrick promoted exports of American liquefied natural gas to Europe in the wake of the Russian invasion of Ukraine, and advocated for Asian countries to invest in natural gas.

The choice to hire McCarrick matches the intentions of OpenAI’s Trump-dominating CEO Sam Altman, who said in a U.S. Senate hearing in May that “in the short term, I think [the future of powering AI] probably looks like more natural gas.”

Sam Altman himself is an acolyte of Peter Thiel, the famous climate denier who recently suggested Greta Thunberg might be the Antichrist. But it’s all of them. In the rush to keep their valuations high, the big AI players are increasingly relying not just on fracked gas but on the very worst version of it. As Bloomberg reported early in the summer:

The trend has sparked an unlikely comeback for a type of gas turbine that long ago fell out of favor for being inefficient and polluting… a technology that’s largely been relegated to the sidelines of power production: small, single cycle natural gas turbines.

In fact, big suppliers are now companies like Caterpillar, not known for cutting edge turbine technology; these are small and comparatively dirty units.

(The ultimate example of this is Elon Musk’s Colossus supercomputer in Memphis, a superpolluter, which I wrote about for the New Yorker.) Oh, and it’s not just air pollution. A new threat emerged in the last few weeks, according to Tom Perkins in the Guardian:

Advocates are particularly concerned over the facilities’ use of Pfas gas, or f-gas, which can be potent greenhouse gases, and may mean datacenters’ climate impact is worse than previously thought. Other f-gases turn into a type of dangerous compound that is rapidly accumulating across the globe.

No testing for Pfas air or water pollution has yet been done, and companies are not required to report the volume of chemicals they use or discharge. But some environmental groups are starting to push for state legislation that would require more reporting.

Look, here’s one bottom line: If we actually had to build enormous networks of AI data centers, the obvious, cheap, and clean way to do it would be with lots of solar energy. It goes up fast. As an industry study found as long ago as December of 2024 (an eon in AI time):

Off-grid solar microgrids offer a fast path to power AI datacenters at enormous scale. The tech is mature, the suitable parcels of land in the US Southwest are known, and this solution is likely faster than most, if not all, alternatives.

As one of the country’s leading energy executives said in April:

“Renewables and battery storage are the lowest-cost form of power generation and capacity,” according to NextEra chief executive John Ketchum. “We can build these projects and get new electrons on the grid in 12 to 18 months.”

But we can’t do that because the Trump administration has a corrupt ideological bias against clean energy, the latest example of which came last week when a giant Nevada solar project was cancelled. As Jael Holzman was the first to report:

Esmeralda 7 was supposed to produce a gargantuan 6.2 gigawatts of power–equal to nearly all the power supplied to southern Nevada by the state’s primary public utility. It would do so with a sprawling web of solar panels and batteries across the western Nevada desert. Backed by NextEra Energy, Invenergy, ConnectGen, and other renewables developers, the project was moving forward at a relatively smooth pace under the Biden administration.

But now it’s dead. One result will be higher prices for consumers. Despite everything the administration does, renewables are so cheap and easy that markets just keep choosing them. To beat that means policy as perverse as what we’re seeing—jury-rigging tired gas turbines and refitting ancient coal plants. All to power a technology that… seems increasingly like a bubble?

Here we need to get away from energy implications a bit, and just think about the underlying case for AI, and specifically the large language models that are the thing we’re spending so much money and power on. The AI industry is, increasingly, the American economy—it accounts for almost half of US economic growth this year, and an incredible 80% of the expansion of the stock market. As Ruchir Sharma wrote in the FT last week, the US economy is “one big bet” on AI:

The main reason AI is regarded as a magic fix for so many different threats is that it is expected to deliver a significant boost to productivity growth, especially in the US. Higher output per worker would lower the burden of debt by boosting GDP. It would reduce demand for labour, immigrant or domestic. And it would ease inflation risks, including the threat from tariffs, by enabling companies to raise wages without raising prices.

But for this happy picture to come to pass, AI has to actually work, which is to say do more than help kids cheat on their homework. And there’s been a growing sense in recent months that all is not right on that front. I’ve been following two AI skeptics for a year or so, both on Substack (where, increasingly, in-depth and non-orthodox reporting goes to thrive).

The first is Gary Marcus, an AI researcher who has concluded that large language models like ChatGPT are going down a blind alley. If you like to watch video, here is an encapsulation of his main points, published over the weekend. If you prefer that old-fashioned technology of reading (call me a Luddite, but it seems faster and more efficient, and much easier to excerpt), here’s his recent account from the Times explaining why businesses are having trouble finding reasons to pay money for this technology:

Large language models have had their uses, especially for coding, writing, and brainstorming, in which humans are still directly involved. But no matter how large we have made them, they have never been worthy of our trust.

Indeed, an MIT study this year found that 95% of businesses reported no measurable increase in productivity from using AI; the Harvard Business Review, a couple of weeks ago, said AI “workslop” was cratering productivity.

And what that means, in turn, is that there’s no real way to imagine recovering the hundreds of billions and trillions that are currently being invested in the technology. The keeper of the spreadsheets is the other Substacker, Ed Zitron, who writes extremely long and increasingly exasperated essays looking at the financial lunacy of these “investments” which, remember, underpin the stock market at the moment. Here’s last week’s:

In fact, let me put it a little simpler: All of those data center deals you’ve seen announced are basically bullshit. Even if they get the permits and the money, there are massive physical challenges that cannot be resolved by simply throwing money at them.

Today I’m going to tell you a story of chaos, hubris and fantastical thinking. I want you to come away from this with a full picture of how ridiculous the promises are, and that’s before you get to the cold hard reality that AI fucking sucks.

I’m not pretending this is the final word on this subject. No one knows how it’s all going to work out, but my guess is: badly. Already it’s sending electricity prices soaring and increasing fossil fuel emissions.

But maybe it’s also running into other kinds of walls that will eventually reduce demand. Maybe human beings will decide to be… human. The new Sora “service” launched by OpenAI that allows your AI to generate fake videos, for instance, threatens to undermine the entire business of looking at videos because… what’s the point? If you can’t tell if the guy eating a ridiculously hot chili pepper is real or not, why would you watch? In a broader sense, as John Burn-Murdoch wrote in the FT (and again, how lucky Europe is to have a reputable business newspaper), we may be reaching “peak social media”:

It has gone largely unnoticed that time spent on social media peaked in 2022 and has since gone into steady decline, according to an analysis of the online habits of 250,000 adults in more than 50 countries carried out for the FT by the digital audience insights company GWI.

And this is not just the unwinding of a bump in screen time during pandemic lockdowns—usage has traced a smooth curve up and down over the past decade-plus. Across the developed world, adults aged 16 and older spent an average of two hours and 20 minutes per day on social platforms at the end of 2024, down by almost 10 per cent since 2022. Notably, the decline is most pronounced among the erstwhile heaviest users—teens and 20-somethings.

Which is to say: Perhaps at some point we’ll begin to come to our senses and start using our brains and bodies for the things they were built for: contact with each other, and with the world around us. That’s a lot to ask, but the world can turn in good directions as well as bad. As a final word, there’s this last week from Pope Leo, speaking to a bunch of news executives around the world, and imploring them to cool it with the junk they’re putting out:

Communication must be freed from the misguided thinking that corrupts it, from unfair competition, and the degrading practice of so-called clickbait.

Stay tuned. This story will have a lot to do with how the world turns out.
