The pro-Democratic super PAC, called Making Our Tomorrow, will work to influence congressional races in Illinois, while the pro-GOP PAC, called Forge the Future Project, will be focusing on congressional races in Texas.

The Times noted that Meta has in the past been "cautious about campaign engagements, making small donations out of a corporate political action committee and contributing to presidential inaugurations," but it has decided to ramp up its spending to defend its AI business from governmental interference.

Meta's spending splurge to elect pro-AI candidates is just one of many efforts by the AI industry to ensure a friendly regulatory environment.

CNN reported last week that Leading the Future—a super PAC backed by venture capital firm Andreessen Horowitz, Palantir co-founder Joe Lonsdale, and other AI heavyweights—is pledging to spend at least $100 million to influence the 2026 midterm election.

The goal of the PAC will be to elect lawmakers who will pass legislation establishing a single set of AI regulations that would take effect throughout the US, overriding any restrictions placed on the technology by state governments.

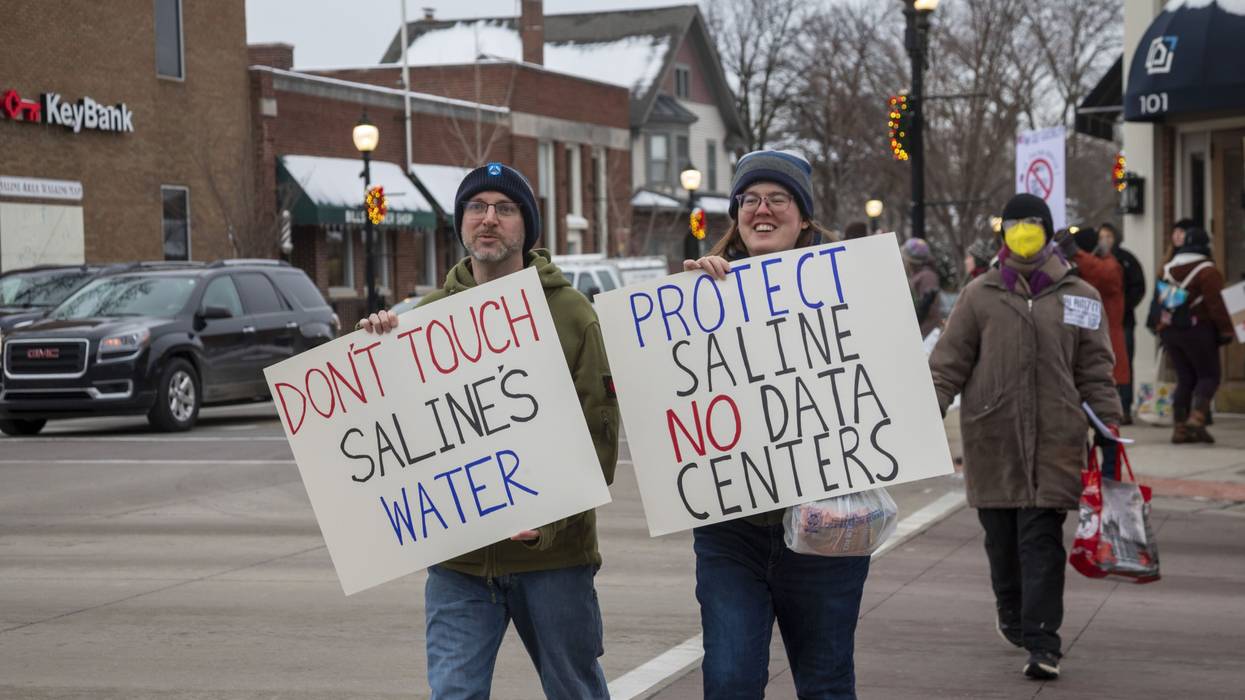

The PACs' big spending comes as a nationwide backlash to Big Tech has been forming, with many communities fighting the construction of energy-devouring AI data centers that are raising electricity prices and have been accused of degrading the quality of local water supplies.

Reed Showalter, a Democratic US House of Representatives candidate running in Illinois' 7th Congressional District, said the report of Meta's big spending showed the importance of ensuring that voters elect leaders who will hold the major tech companies accountable.

"We deserve representatives who are going to take an honest look at AI and regulate it accordingly," he wrote in a social media post. "We can't afford more corrupt politicians bought by Big Tech."

Democratic New York congressional candidate Alex Bores, who is running on a platform of regulating AI, said during an interview with CNN on Wednesday that the tech companies' actions show they are "terrified" of being held accountable by elected officials.

He also noted that being attacked by the Leading the Future super PAC has ironically helped his candidacy.

"The fact that they're being so aggressive with it, I think, has been redounding to my benefit," he told host Dana Bash. "I've had a lot of constituents who have reached out and said, 'I hadn't even heard of you until all these text messages [from the AI super PAC].'"

Watchdog social media account @OilPACTracker predicted that Meta's major political spending could turn into a liability if voters are made aware of its machinations.

"We would make sure the electorate knows about it," the watchdog wrote. "Big Tech money is toxic."