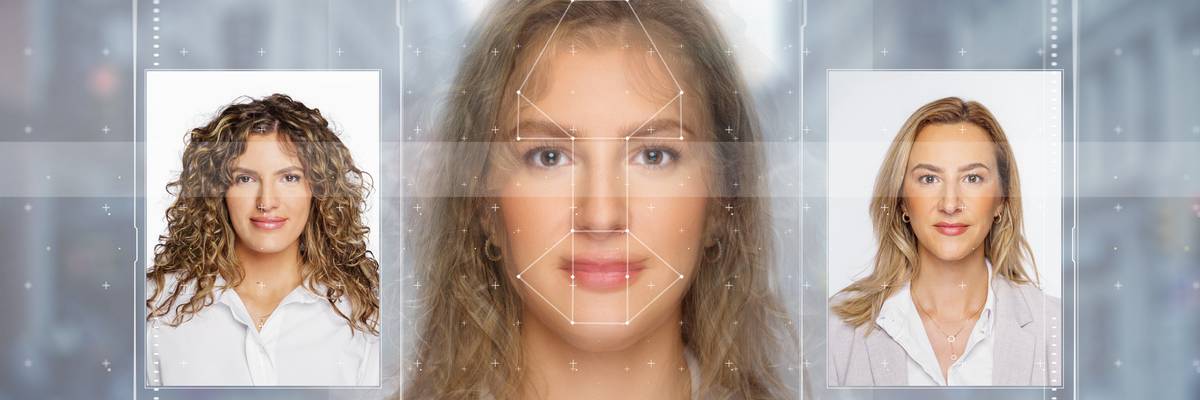

AI technology is seen changing the appearance of a woman.

"It's time for the FCC to protect voters from deepfakes," said one advocate.

A week after the Federal Election Commission announced it would not take action to regulate artificial intelligence-generated "deepfakes" in political ads, more than 40 civil society groups on Thursday called on the Federal Communications Commission to step in and ensure that U.S. voters are informed about fake content used by campaigns as they prepare to go to the polls.

The groups, including Public Citizen, the AFL-CIO, Access Now, and the Campaign Legal Center, backed an FCC proposal to require on-air and written disclosures when political ads contain AI-generated content.

"It's time for the FCC to protect voters from deepfakes!" said Willmary Escoto, policy counsel for Access Now.

Unveiled in May by FCC Chair Jessica Rosenworcel, the proposal would apply the disclosure rules to both candidate and issue ads and would seek a "specific definition of AI-generated content."

The civil society groups expressed their "strong support" for rules requiring "transparency in the use of AI-generated content in political advertisements on TV and radio, especially when the AI-generated content falsely depicts a candidate or persons saying or doing something that they never did with the intent to cause harm or deceive voters (known as 'deepfakes')."

"These rules are essential to safeguard the integrity of our democratic processes and ensure that voters are fully informed of the origins of political advertisements," wrote the groups.

Public Citizen condemned the Federal Election Commission last week when its Republican chair, Sean Cooksey, said the agency should "study how AI is actually used on the ground before considering any new rules."

The groups on Thursday said evidence already "abounds of the significant and deceptive impact that AI-generated content can have," pointing to a deepfake video recently posted by X owner Elon Musk that manipulated the likeness of Vice President Kamala Harris, the Democratic presidential candidate, to make it appear that she called herself the "ultimate diversity hire."

"The proposed disclosure requirements are a natural and common-sense extension of the FCC's existing mandates to ensure transparency in broadcasting in general and in political advertising on radio and TV in particular," said the groups.

They also commended the FCC's leadership in addressing the "critical issue" of deepfakes.

Dear Common Dreams reader, The U.S. is on a fast track to authoritarianism like nothing I've ever seen. Meanwhile, corporate news outlets are utterly capitulating to Trump, twisting their coverage to avoid drawing his ire while lining up to stuff cash in his pockets. That's why I believe that Common Dreams is doing the best and most consequential reporting that we've ever done. Our small but mighty team is a progressive reporting powerhouse, covering the news every day that the corporate media never will. Our mission has always been simple: To inform. To inspire. And to ignite change for the common good. Now here's the key piece that I want all our readers to understand: None of this would be possible without your financial support. That's not just some fundraising cliche. It's the absolute and literal truth. We don't accept corporate advertising and never will. We don't have a paywall because we don't think people should be blocked from critical news based on their ability to pay. Everything we do is funded by the donations of readers like you. Will you donate now to help power the nonprofit, independent reporting of Common Dreams? Thank you for being a vital member of our community. Together, we can keep independent journalism alive when it’s needed most. - Craig Brown, Co-founder |

A week after the Federal Elections Commission announced it would not take action to regulate artificial intelligence-generated "deepfakes" in political ads, more than 40 civil society groups on Thursday called on the Federal Communications Commission to step in to ensure U.S. voters will be informed about fake content used by campaigns as they prepare to go to the polls.

The groups, including Public Citizen, the AFL-CIO, Access Now, and the Campaign Legal Center, backed a proposal by the FCC to require on-air and written disclosures when there is AI-generated content in political ads.

"It's time for the FCC to protect voters from deepfakes!" said Willmary Escoto, policy counsel for Access Now.

Unveiled in May by FCC Chair Jessica Rosenworcel, the FCC's proposal would apply the disclosure rules to ads pertaining to candidates and issues and push for a "specific definition of AI-generated content."

"These rules are essential to safeguard the integrity of our democratic processes and ensure that voters are fully informed of the origins of political advertisements."

The civil society groups expressed their "strong support" for rules requiring "transparency in the use of AI-generated content in political advertisements on TV and radio, especially when the AI-generated content falsely depicts a candidate or persons saying or doing something that they never did with the intent to cause harm or deceive voters (known as 'deepfakes')."

"These rules are essential to safeguard the integrity of our democratic processes and ensure that voters are fully informed of the origins of political advertisements," wrote the groups.

Public Citizen condemned the Federal Election Commission last week when its Republican chair, Sean Cooksey, said the agency should "study how AI is actually used on the ground before considering any new rules."

The groups on Thursday said evidence already "abounds of the significant and deceptive impact that AI-generated content can have," with X owner Elon Musk recently posting a deepfake video that showed a manipulated image of Democratic presidential candidate and Vice President Kamala Harris, making it seem like she was saying she was the "ultimate diversity hire."

"The proposed disclosure requirements are a natural and common-sense extension of the FCC's existing mandates to ensure transparency in broadcasting in general and in political advertising on radio and TV in particular," said the groups.

They also commended the FCC's leadership in addressing the "critical issue" of deepfakes.

A week after the Federal Elections Commission announced it would not take action to regulate artificial intelligence-generated "deepfakes" in political ads, more than 40 civil society groups on Thursday called on the Federal Communications Commission to step in to ensure U.S. voters will be informed about fake content used by campaigns as they prepare to go to the polls.

The groups, including Public Citizen, the AFL-CIO, Access Now, and the Campaign Legal Center, backed a proposal by the FCC to require on-air and written disclosures when there is AI-generated content in political ads.

"It's time for the FCC to protect voters from deepfakes!" said Willmary Escoto, policy counsel for Access Now.

Unveiled in May by FCC Chair Jessica Rosenworcel, the FCC's proposal would apply the disclosure rules to ads pertaining to candidates and issues and push for a "specific definition of AI-generated content."

"These rules are essential to safeguard the integrity of our democratic processes and ensure that voters are fully informed of the origins of political advertisements."

The civil society groups expressed their "strong support" for rules requiring "transparency in the use of AI-generated content in political advertisements on TV and radio, especially when the AI-generated content falsely depicts a candidate or persons saying or doing something that they never did with the intent to cause harm or deceive voters (known as 'deepfakes')."

"These rules are essential to safeguard the integrity of our democratic processes and ensure that voters are fully informed of the origins of political advertisements," wrote the groups.

Public Citizen condemned the Federal Election Commission last week when its Republican chair, Sean Cooksey, said the agency should "study how AI is actually used on the ground before considering any new rules."

The groups on Thursday said evidence already "abounds of the significant and deceptive impact that AI-generated content can have," with X owner Elon Musk recently posting a deepfake video that showed a manipulated image of Democratic presidential candidate and Vice President Kamala Harris, making it seem like she was saying she was the "ultimate diversity hire."

"The proposed disclosure requirements are a natural and common-sense extension of the FCC's existing mandates to ensure transparency in broadcasting in general and in political advertising on radio and TV in particular," said the groups.

They also commended the FCC's leadership in addressing the "critical issue" of deepfakes.