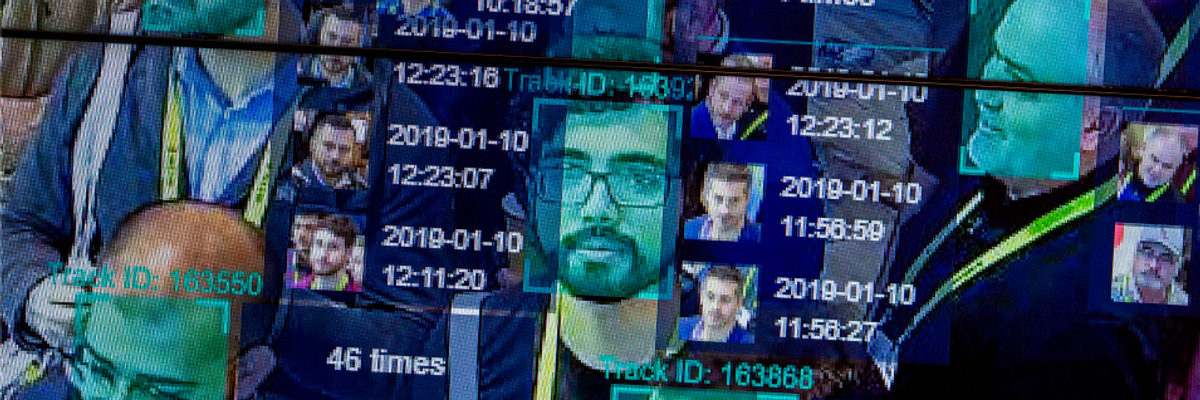

A live demonstration uses artificial intelligence and facial recognition in dense crowd spatial-temporal technology at the Horizon Robotics exhibit at the Las Vegas Convention Center during CES 2019 in Las Vegas on January 10, 2019. (Photo: David McNew/AFP via Getty Images)

The ACLU and law firm Edelson PC on Thursday filed a lawsuit in Illinois state court to end the "unlawful, privacy-destroying surveillance activities" of Clearview AI, a U.S.-based facial recognition technology startup that has contracted with hundreds of law enforcement agencies across the country.

"Companies like Clearview will end privacy as we know it, and must be stopped," declared Nathan Freed Wessler, senior staff attorney with the ACLU's Speech, Privacy, and Technology Project.

"The ACLU is taking its fight to defend privacy rights against the growing threat of this unregulated surveillance technology to the courts," he said, "even as we double down on our work in legislatures and city councils nationwide."

The suit was filed in the Circuit Court of Cook County on behalf of the national ACLU, the ACLU of Illinois, the Chicago Alliance Against Sexual Exploitation, the Sex Workers Outreach Project, the Illinois State Public Interest Research Group (PIRG), and Mujeres Latinas en Accion. As Freed Wessler wrote in an ACLU blog post about the case:

Face recognition technology offers a surveillance capability unlike any other technology in the past. It makes it dangerously easy to identify and track us at protests, AA meetings, counseling sessions, political rallies, religious gatherings, and more. For our clients--organizations that serve survivors of domestic violence and sexual assault, undocumented immigrants, and people of color--this surveillance system is dangerous and even life-threatening. It empowers abusive ex-partners and serial harassers, exploitative companies, and ICE agents to track and target domestic violence and sexual assault survivors, undocumented immigrants, and other vulnerable communities.

The complaint accuses Clearview AI of violating Illinois residents' privacy rights under the state's Biometric Information Privacy Act (BIPA). The 2008 Illinois law requires companies to notify and obtain written consent from state residents before collecting or obtaining their biometric information or identifiers, which BIPA defines as "a retina or iris scan, fingerprint, voiceprint, or scan of hand or face geometry." Many activists and experts consider the law in Illinois to be the country's toughest state biometric privacy standard.

"Clearview is violating the privacy rights of Illinois residents at a staggering scale," said Rebecca Glenberg, senior staff counsel with the ACLU of Illinois. "Clearview's practices are exactly the kind of threat to privacy that the Illinois legislature intended to address through BIPA, and demonstrate why states across the country should adopt legal protections like the ones in Illinois."

Edelson PC is known nationally for consumer privacy litigation and for filing the first cases under BIPA. Lead counsel Jay Edelson warned Thursday that "Clearview's actions represent one of the largest threats to personal privacy by a private company our country has faced. If a well-funded, politically connected company can simply amass information to track all of us, we are living in a different America."

Clearview AI, founded by Vietnamese-Australian serial entrepreneur Hoan Ton-That, has faced scrutiny since a January New York Times exposé--titled "The Secretive Company That Might End Privacy as We Know It"--detailed the startup's activities:

His tiny company, Clearview AI, devised a groundbreaking facial recognition app. You take a picture of a person, upload it, and get to see public photos of that person, along with links to where those photos appeared. The system--whose backbone is a database of more than three billion images that Clearview claims to have scraped from Facebook, YouTube, Venmo and millions of other websites--goes far beyond anything ever constructed by the United States government or Silicon Valley giants.

Federal and state law enforcement officers said that while they had only limited knowledge of how Clearview works and who is behind it, they had used its app to help solve shoplifting, identity theft, credit card fraud, murder, and child sexual exploitation cases.

Clearview AI told the Times that over 600 law enforcement agencies had used the company's controversial facial recognition technology in the past year but declined to provide a list. The Chicago Sun-Times noted Thursday in reporting on the new lawsuit that the Chicago Police Department is among those agencies.

Referencing the Times report in a statement announcing the suit Thursday, the ACLU said that "the company's actions embodied the nightmare scenario privacy advocates long warned of, and accomplished what many companies--such as Google--refused to try due to ethical concerns."

Representatives from groups named as plaintiffs in the case explained how the communities they support are particularly threatened by facial recognition tools. Kathryn Rosenfeld, a member of the Sex Workers Outreach Project-Chicago Leadership Board, said that "as members of a criminalized population and profession who additionally battle social stigma and economic marginalization, sex workers' ability to maintain anonymity is crucial to personal safety both on and off the job."

"Sex workers are already extremely vulnerable to violence and persecution by law enforcement, clientele, would-be economic exploiters, and members of the general public," Rosenfeld added. "The ability of these individuals to track and target us using facial recognition technology further threatens our community's ability to earn our livelihoods safely."

Linda Xochitl Tortolero is president and CEO of Mujeres Latinas en Accion, a nonprofit that provides services to survivors of domestic violence and sexual assault and to undocumented immigrants.

"For many Latinas and survivors, this technology isn't just unnerving, it's dangerous, even life-threatening," said Xochitl Tortolero. "It gives free rein to stalkers and abusive ex-partners, predatory companies, and ICE agents to track and target us."

As Mallory Littlejohn from the Chicago Alliance Against Sexual Exploitation put it: "We can change our names and addresses to shield our whereabouts and identities from stalkers and abusive partners, but we can't change our faces."

"Clearview's practices," she said, "put survivors in constant fear of being tracked by those who seek to harm them, and are a threat to our security, safety, and well-being."
