Citing Moral and Legal Void, Rights Groups Demand Preemptive Ban on 'Killer Robots'

Fully autonomous weapons could allow manufacturers, military to escape liability for wrongful deaths

Fully autonomous weapons, or "killer robots," present a legal and ethical quagmire and must be banned before they can be further developed, a new human rights report published Thursday urges ahead of next week's United Nations meeting on lethal weapons.

The report, titled Mind the Gap: The Lack of Accountability for Killer Robots, was jointly published by Human Rights Watch and Harvard Law School's International Human Rights Clinic and outlines the "serious moral and legal concerns" presented by the weapons, which would "possess the ability to select and engage their targets without meaningful human control."

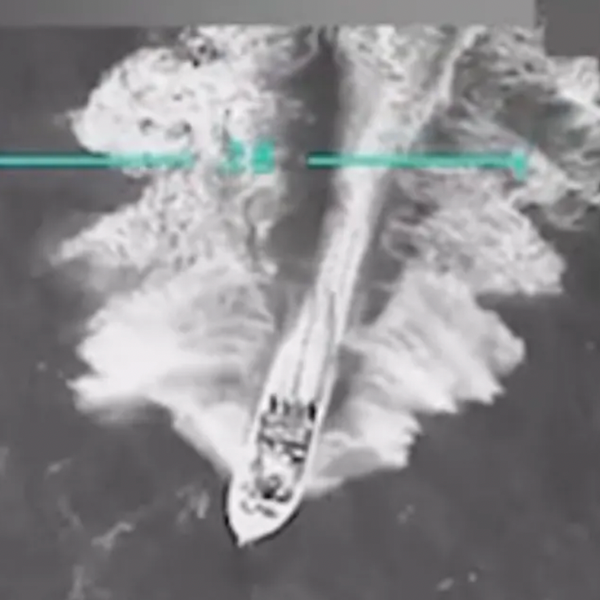

Although fully autonomous weapons do not yet exist, their "precursors" are already in use, such as the Iron Dome in Israel and the Phalanx CIWS in the U.S., the report states.

Under current law, the makers and users of killer robots could escape accountability for unlawful deaths and injuries if the weapons are allowed to be developed. Allowing weapons that operate without human control to make decisions about the use of lethal force could lead to violations of international law and make it difficult to hold anyone accountable for those crimes. Moreover, civil liability would be "virtually impossible, at least in the United States," the report found.

"No accountability means no deterrence of future crimes, no retribution for victims, no social condemnation of the responsible party," lead author and HRW Arms Division researcher Bonnie Docherty said in a press release on Thursday. "The many obstacles to justice for potential victims show why we urgently need to ban fully autonomous weapons."

The UN will discuss killer robots and more conventional arms at its upcoming meeting on inhumane weapons in Geneva, Switzerland, from April 13 to 17. In the past, the UN has used the gathering to preemptively ban military tools such as blinding lasers.

The report urges the UN to take similar action on fully autonomous weapons, stating:

In order to preempt the accountability gap that would arise if fully autonomous weapons were manufactured and deployed, Human Rights Watch and Harvard Law School's International Human Rights Clinic (IHRC) recommend that states:

- Prohibit the development, production, and use of fully autonomous weapons through an international legally binding instrument.

- Adopt national laws and policies that prohibit the development, production, and use of fully autonomous weapons.

Docherty concluded, "The lack of accountability adds to the legal, moral, and technological case against fully autonomous weapons and bolsters the call for a preemptive ban."
